A Python package & command-line tool to gather text on the Web#

Description#

Trafilatura is a Python package and command-line tool designed to gather text on the Web. It includes discovery, extraction and text processing components. Its main applications are web crawling, downloads, scraping, and extraction of main texts, metadata and comments. It aims to stay handy and modular: no database is required, and the output can be converted to commonly used formats.

Going from raw HTML to essential parts can alleviate many problems related to text quality, first by avoiding the noise caused by recurring elements (headers, footers, links/blogroll etc.) and second by including information such as author and date in order to make sense of the data. The extractor tries to strike a balance between limiting noise (precision) and including all valid parts (recall). It also has to be robust and reasonably fast, since it runs in production on millions of documents.
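The trade-off between precision and recall can be made concrete with a small token-level comparison. The following is a minimal sketch using only the standard library; the function name `token_precision_recall`, the whitespace tokenization and the sample strings are assumptions chosen for illustration, not the method used in the project's own benchmarks.

```python
from collections import Counter
from typing import Tuple


def token_precision_recall(extracted: str, reference: str) -> Tuple[float, float]:
    """Token-level precision and recall of an extraction against a reference text."""
    extracted_tokens = Counter(extracted.lower().split())
    reference_tokens = Counter(reference.lower().split())
    # Multiset intersection: a token counts at most as often as it occurs in both texts.
    overlap = sum((extracted_tokens & reference_tokens).values())
    n_extracted = sum(extracted_tokens.values())
    n_reference = sum(reference_tokens.values())
    precision = overlap / n_extracted if n_extracted else 0.0
    recall = overlap / n_reference if n_reference else 0.0
    return precision, recall


# Hypothetical sample data: a reference text and two imperfect extractions.
reference = "Wire drawing refines metal. The process is old."
noisy = "Home About Contact Wire drawing refines metal. The process is old."
partial = "Wire drawing refines metal."

print(token_precision_recall(noisy, reference))    # navigation noise lowers precision
print(token_precision_recall(partial, reference))  # missing text lowers recall
```

An extraction that keeps navigation links scores full recall but reduced precision, while one that drops a sentence scores full precision but reduced recall.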

This tool can be useful for quantitative research in corpus linguistics, natural language processing, computational social science and beyond: it is relevant to anyone interested in data science, information extraction, text mining, and scraping-intensive use cases like search engine optimization, business analytics or information security.

Features#

- Advanced web crawling and text discovery:
  - Support for sitemaps (TXT, XML) and feeds (ATOM, JSON, RSS)
  - Smart crawling and URL management (filtering and deduplication)
- Parallel processing of online and offline input:
  - Live URLs, efficient and polite processing of download queues
  - Previously downloaded HTML files and parsed HTML trees
- Robust and configurable extraction of key elements:
  - Main text (common patterns and generic algorithms like jusText and readability)
  - Metadata (title, author, date, site name, categories and tags)
  - Formatting and structure: paragraphs, titles, lists, quotes, code, line breaks, in-line text formatting
  - Optional elements: comments, links, images, tables
- Multiple output formats:
  - Text (minimal formatting or Markdown)
  - CSV (with metadata)
  - JSON (with metadata)
  - XML or XML-TEI (with metadata, text formatting and page structure)
- Optional add-ons:
  - Language detection on extracted content
  - Graphical user interface (GUI)
  - Speed optimizations
- Actively maintained with support from the open-source community:
  - Regular updates, feature additions, and optimizations
  - Comprehensive documentation
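To illustrate what URL filtering and deduplication can involve, here is a minimal standard-library sketch. It is not the library's own implementation: the helper names `normalize_url` and `filter_and_deduplicate`, the list of skipped extensions and the normalization rules (dropping fragments and trailing slashes) are simplifying assumptions made for this example.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical filter: file extensions that rarely lead to text content.
SKIPPED_EXTENSIONS = (".jpg", ".png", ".gif", ".pdf", ".zip", ".css", ".js")


def normalize_url(url: str) -> str:
    """Lowercase scheme and host, drop the fragment and any trailing slash."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def filter_and_deduplicate(urls):
    """Keep the first occurrence of each normalized HTTP(S) URL that may hold text."""
    seen = set()
    kept = []
    for url in urls:
        normalized = normalize_url(url)
        parts = urlsplit(normalized)
        # Discard non-web schemes such as mailto: and URLs without a host.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue
        # Discard obvious non-text resources.
        if parts.path.lower().endswith(SKIPPED_EXTENSIONS):
            continue
        if normalized not in seen:
            seen.add(normalized)
            kept.append(normalized)
    return kept


candidates = [
    "https://Example.org/blog/post-1/",
    "https://example.org/blog/post-1#comments",
    "https://example.org/static/logo.png",
    "mailto:someone@example.org",
    "https://example.org/blog/post-2?page=2",
]
print(filter_and_deduplicate(candidates))
```

Treating URLs with and without a trailing slash as identical is a shortcut that does not hold on every site, so a production crawler would need more careful rules.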

Evaluation and alternatives#

Trafilatura consistently outperforms other open-source libraries in text extraction benchmarks, which reflects its efficiency and accuracy in extracting web content.

The benchmark section details alternatives and results; the evaluation readme describes how to reproduce the evaluation.

In a nutshell#

The primary installation method is with a Python package manager: pip install trafilatura (see the installation documentation).

With Python:

    >>> import trafilatura
    >>> downloaded = trafilatura.fetch_url('https://github.blog/2019-03-29-leader-spotlight-erin-spiceland/')
    >>> trafilatura.extract(downloaded)
    # outputs main content and comments as plain text ...

On the command-line:

    $ trafilatura -u "https://github.blog/2019-03-29-leader-spotlight-erin-spiceland/"
    # outputs main content and comments as plain text ...

For more, see the usage documentation and tutorials.

License#

This package is distributed under the Apache 2.0 license.

Versions prior to v1.8.0 are under the GPLv3+ license.

Contributing#

Contributions of all kinds are welcome. Visit the Contributing page for more information. Bug reports can be filed on the dedicated issue page.

Many thanks to the contributors who extended the docs or submitted bug reports, features and bugfixes!

Context#

Extracting and pre-processing web texts to the exacting standards of scientific research presents a substantial challenge. These documentation pages also provide information on concepts behind data collection as well as practical tips on how to gather web texts (see tutorials).

Trafilatura is an Italian word for wire drawing, symbolizing the refinement and conversion process. It is also the way shapes of pasta are formed.

Citing Trafilatura#

Trafilatura is widely used in the academic domain, chiefly for data acquisition. Here is how to cite it:

    @inproceedings{barbaresi-2021-trafilatura,
      title = {{Trafilatura: A Web Scraping Library and Command-Line Tool for Text Discovery and Extraction}},
      author = "Barbaresi, Adrien",
      booktitle = "Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing: System Demonstrations",
      pages = "122--131",
      publisher = "Association for Computational Linguistics",
      url = "https://aclanthology.org/2021.acl-demo.15",
      year = 2021,
    }

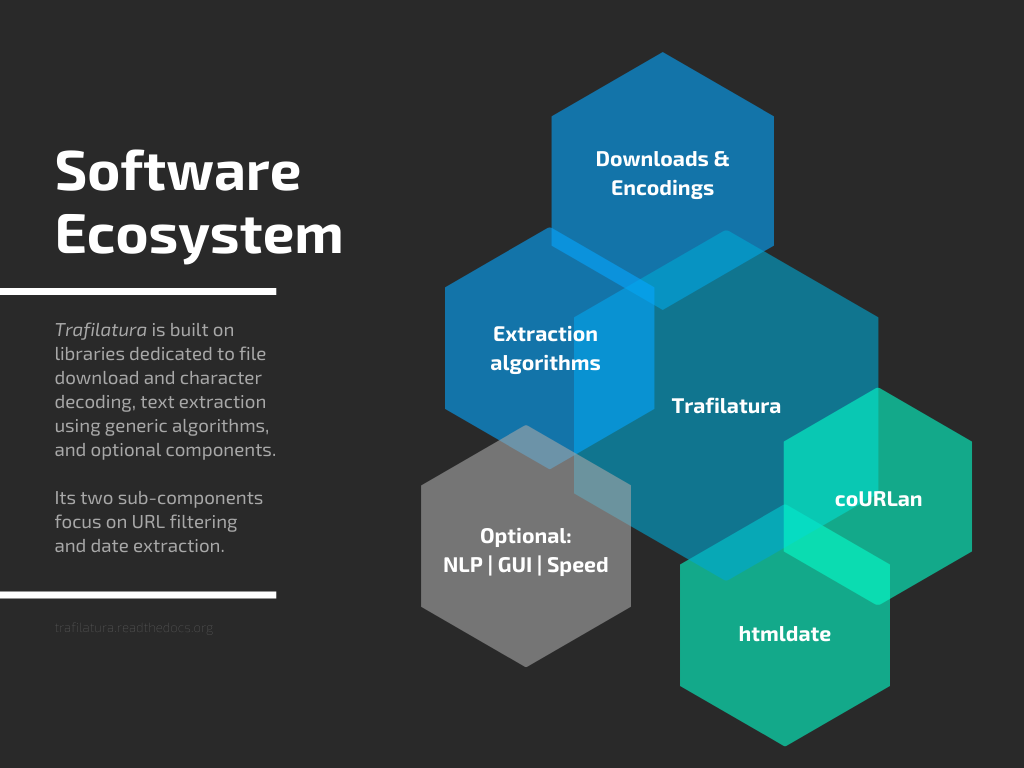

Software ecosystem#

Case studies and publications are listed on the Used By documentation page.

Jointly developed plugins and additional packages also contribute to the field of web data extraction and analysis:

Corresponding posts on Bits of Language (blog).